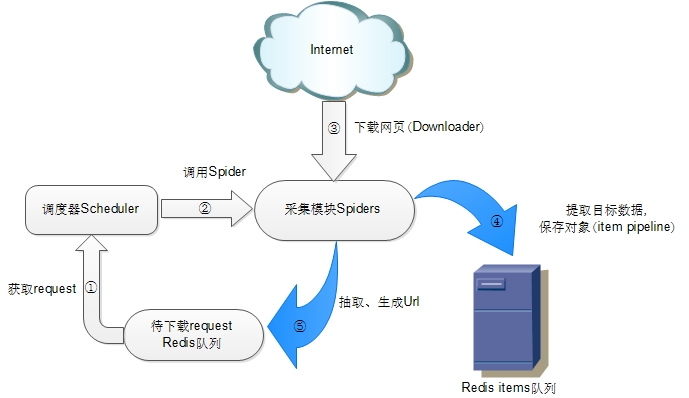

Implementing a distributed crawler with scrapy-redis

scrapy-redis architecture

1. Installation

Install scrapy-redis with pip:

```shell
pip install scrapy-redis
```

2. Enable the components in settings.py

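Note: Chinese comments in settings.py raise errors, so write the comments in English. This applies to Python 2 source files without a `# -*- coding: utf-8 -*-` declaration; Python 3 reads source files as UTF-8 by default.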

```python
# Enable scheduling and storing the request queue in Redis.
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Don't clear the Redis queues; this allows crawls to be paused and resumed.
SCHEDULER_PERSIST = True

# Queue used to order the URLs to crawl; the default orders requests by priority.
SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.SpiderPriorityQueue'
# Optional: first-in, first-out (FIFO) ordering.
# SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.SpiderQueue'
# Optional: last-in, first-out (LIFO) ordering.
# SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.SpiderStack'

# Only takes effect with SpiderQueue or SpiderStack: the maximum idle time
# (in seconds) the spider waits on an empty queue before it closes.
SCHEDULER_IDLE_BEFORE_CLOSE = 10
```
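The sketch below is a small in-memory model that makes the three SCHEDULER_QUEUE_CLASS options concrete by showing how each one orders requests. It rests on an assumption drawn from my reading of scrapy-redis: the FIFO and LIFO queues are Redis lists (push at the head, then pop from the tail or from the head), and the priority queue is a sorted set scored by the negated priority. Plain Python lists stand in for Redis. `model_pop_order` is a hypothetical helper, not a scrapy-redis API.

```python
from typing import List, Tuple


def model_pop_order(kind: str, requests: List[Tuple[str, int]]) -> List[str]:
    """Return the order in which URLs would be popped (hypothetical model).

    requests: (url, priority) pairs in the order they were pushed.
    kind: "fifo" (SpiderQueue), "lifo" (SpiderStack) or
          "priority" (SpiderPriorityQueue).
    """
    if kind not in ("fifo", "lifo", "priority"):
        raise ValueError("unknown queue kind: %s" % kind)

    if kind == "priority":
        # Sorted-set model: score = -priority, lowest score popped first.
        # sorted() is stable, so ties keep push order here; real Redis
        # breaks ties by the serialized member instead.
        return [url for url, _ in sorted(requests, key=lambda r: -r[1])]

    redis_list: List[str] = []
    for url, _ in requests:
        redis_list.insert(0, url)  # LPUSH: add at the head
    popped: List[str] = []
    while redis_list:
        if kind == "fifo":
            popped.append(redis_list.pop())   # RPOP: take from the tail
        else:
            popped.append(redis_list.pop(0))  # LPOP: take from the head
    return popped
```

Suppose the pushes are `a` (priority 0), `b` (10) and `c` (5). The model then pops `a, b, c` for FIFO, `c, b, a` for LIFO, and `b, c, a` for the priority queue. Redis lists also support blocking pops with a timeout (BLPOP/BRPOP). As far as I can tell, the priority queue reads its sorted set without blocking, which would explain why SCHEDULER_IDLE_BEFORE_CLOSE only applies to SpiderQueue and SpiderStack.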

```python
# Enable RedisPipeline to store scraped items in Redis.
ITEM_PIPELINES = {
    'example.pipelines.ExamplePipeline': 300,
    'scrapy_redis.pipelines.RedisPipeline': 400,
}
```
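RedisPipeline collects the items from every crawler process into a single Redis list, so a separate process can drain that list and persist the items. The sketch assumes the scrapy-redis defaults as I understand them: items are serialized to JSON and appended with RPUSH under the key `<spider name>:items`. `drain_items` is a hypothetical helper. It only needs an object with redis-py's `blpop(keys, timeout)` method, so it can be tested without a server.

```python
import json
from typing import Any, Dict, List


def drain_items(client: Any, spider_name: str, timeout: int = 1) -> List[Dict[str, Any]]:
    """Pop and decode every item currently queued for spider_name.

    client: a redis-py style client; only blpop is used.
    Stops as soon as blpop times out and returns None.
    """
    key = "%s:items" % spider_name  # assumed default items key pattern
    items: List[Dict[str, Any]] = []
    while True:
        popped = client.blpop([key], timeout=timeout)
        if popped is None:  # nothing arrived within `timeout` seconds
            break
        _key, raw = popped
        items.append(json.loads(raw))  # json.loads accepts bytes or str
    return items
```

Against a live server, the call would be `drain_items(redis.Redis(host='127.0.0.1', port=6379), 'example')`, where `example` is a placeholder spider name. BLPOP removes each item as it reads it, so several consumer processes can share the work without reading the same item twice.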

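```python
# Redis connection parameters.
# REDIS_PASS is a Redis password setting I added myself; using it requires a small
# change to the scrapy-redis source code so that it can connect with a password.
REDIS_HOST = '127.0.0.1'
REDIS_PORT = 6379
# Custom Redis client parameters (e.g. socket timeout).
# scrapy-redis passes REDIS_PARAMS through to the Redis client, so setting
# REDIS_PARAMS['password'] (commented out below) is an alternative way to pass a password.
REDIS_PARAMS = {}
# If set, REDIS_URL takes precedence over REDIS_HOST and REDIS_PORT.
# REDIS_URL = 'redis://user:pass@hostname:9001'
# REDIS_PARAMS['password'] = 'itcast.cn'
```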
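REDIS_URL packs the host, port, credentials and database number into a single string. The helper below splits such a URL with the standard library so you can see what it will supply. `describe_redis_url` is hypothetical: it only parses the string and never connects. The fallbacks to port 6379 and db 0 are assumptions that match the usual Redis defaults.

```python
from urllib.parse import urlsplit


def describe_redis_url(url: str) -> dict:
    """Split a redis:// URL into the connection fields it carries (no I/O)."""
    parts = urlsplit(url)
    db_path = parts.path.lstrip("/")  # "/1" selects database 1
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "port": parts.port or 6379,  # assumed fallback: the usual Redis port
        "username": parts.username,
        "password": parts.password,
        "db": int(db_path) if db_path else 0,  # assumed fallback: db 0
    }
```

For the commented-out example `redis://user:pass@hostname:9001`, this yields host `hostname`, port `9001`, username `user` and password `pass`.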

```python
LOG_LEVEL = 'DEBUG'

# The class used to detect and filter duplicate requests. scrapy-redis ships its
# own RFPDupeFilter, which keeps request fingerprints in Redis so that all
# crawler processes share one duplicate filter.
# To change how duplicates are detected, subclass RFPDupeFilter and override its
# request_fingerprint method, which takes a Scrapy Request and returns its
# fingerprint as a string.
DUPEFILTER_CLASS = 'scrapy_redis.dupefilter.RFPDupeFilter'

# By default, RFPDupeFilter logs only the first duplicate request;
# setting DUPEFILTER_DEBUG to True makes it log every duplicate request.
DUPEFILTER_DEBUG = True

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'Connection': 'keep-alive',
    'Accept-Encoding': 'gzip, deflate, sdch',
}
```
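In a distributed crawl, duplicate filtering only works across machines if every crawler process checks request fingerprints against the same Redis set. Adding a fingerprint with SADD returns 1 when the fingerprint is new and 0 when some process has already added it. That is the idea behind scrapy-redis's RFPDupeFilter.

The sketch below models this in memory, with these assumptions:

- `FakeRedis` stands in for the server.
- `fingerprint` is a simplified, hypothetical replacement for Scrapy's real request fingerprint.
- `SharedDupeFilter` is not the scrapy-redis class.
- The key `example:dupefilter` assumes a `<spider>:dupefilter` naming scheme.

```python
import hashlib
from typing import Dict, Set


def fingerprint(method: str, url: str, body: bytes = b"") -> str:
    """Hypothetical, simplified fingerprint: SHA1 over method, URL and body.

    Scrapy's real fingerprint also canonicalizes the URL; this one does not.
    """
    h = hashlib.sha1()
    h.update(method.upper().encode())
    h.update(b"\n")
    h.update(url.encode())
    h.update(b"\n")
    h.update(body)
    return h.hexdigest()


class FakeRedis:
    """Stand-in for a Redis server that supports only SADD."""

    def __init__(self) -> None:
        self.sets: Dict[str, Set[str]] = {}

    def sadd(self, key: str, value: str) -> int:
        # Like Redis SADD: 1 if the member was added, 0 if it already existed.
        members = self.sets.setdefault(key, set())
        if value in members:
            return 0
        members.add(value)
        return 1


class SharedDupeFilter:
    """Every instance using the same server and key shares one filter."""

    def __init__(self, server: FakeRedis, key: str) -> None:
        self.server = server
        self.key = key

    def request_seen(self, method: str, url: str, body: bytes = b"") -> bool:
        # 0 added means some process (this one or another) already saw it.
        return self.server.sadd(self.key, fingerprint(method, url, body)) == 0
```

Two filter objects built on the same server and key behave like two crawler processes. As soon as the first one records a URL, the second reports it as seen.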

Proxy middleware

```python
import base64

import redis


class ProxyMiddleware(object):

    def __init__(self, settings):
        # Redis list holding the proxy pool, one "ip:port" string per entry
        self.queue = 'Proxy:queue'
        # Initialize the connection to the proxy list (Redis db 1).
        # decode_responses=True makes redis-py return str instead of bytes,
        # so "http://%s" % data builds a valid proxy URL on Python 3.
        # With a password:
        # self.r = redis.Redis(host=settings.get('REDIS_HOST'), port=settings.getint('REDIS_PORT'), db=1,
        #                      password=settings.getdict('REDIS_PARAMS')['password'], decode_responses=True)
        self.r = redis.Redis(host=settings.get('REDIS_HOST'),
                             port=settings.getint('REDIS_PORT'),
                             db=1, decode_responses=True)

    @classmethod
    def from_crawler(cls, crawler):
        return cls(crawler.settings)

    def process_request(self, request, spider):
        # Take the next proxy from the head of the pool. blpop blocks until a
        # proxy is available, and the whole crawler waits while it blocks.
        source, data = self.r.blpop(self.queue)
        proxy = {'ip_port': data, 'user_pass': None}
        # e.g. request.meta['proxy'] = "http://YOUR_PROXY_IP:PORT"
        request.meta['proxy'] = "http://%s" % proxy['ip_port']
        if proxy['user_pass'] is not None:
            # Proxy with authentication; user_pass has the form "USERNAME:PASSWORD"
            encoded_user_pass = base64.b64encode(proxy['user_pass'].encode()).decode()
            request.headers['Proxy-Authorization'] = 'Basic ' + encoded_user_pass
            spider.logger.debug("ProxyMiddleware with auth: %s", proxy['ip_port'])
        else:
            # Proxy without authentication
            spider.logger.debug("%s via %s", request.url, proxy['ip_port'])

    def process_response(self, request, response, spider):
        """
        Check response.status and, depending on whether it is an allowed status
        code, either keep the proxy in rotation or disable it.
        """
        spider.logger.debug("%s %s %s", request.meta["proxy"], response.status, request.url)
        # A status that is neither the normal 200 nor one the spider declares as
        # expected during normal crawling means the proxy is treated as invalid.
        # This version only checks for 200: a working proxy is pushed back to the
        # tail of the pool, and any other status drops it from the pool.
        if response.status == 200:
            self.r.rpush(self.queue, request.meta["proxy"].replace('http://', ''))
        return response

    def process_exception(self, request, exception, spider):
        """
        Handle connection errors caused by the proxy: the failed proxy is not
        returned to the pool, and the request is retried through the next one.
        """
        source, data = self.r.blpop(self.queue)
        request.meta['proxy'] = "http://%s" % data
        new_request = request.copy()
        # Bypass the duplicate filter, which has already seen this request
        new_request.dont_filter = True
        return new_request
```
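The middleware treats `Proxy:queue` as a rotating pool:

- Each request takes a proxy from the head of the list (BLPOP).
- A proxy that answered 200 goes back to the tail (RPUSH).
- A proxy that returned another status or raised a connection error is never pushed back.

The model below replays that life cycle with a `collections.deque` in place of the Redis list, so it runs without a server. `ProxyPoolModel` and `simulate` are hypothetical names, not part of Scrapy or scrapy-redis.

```python
from collections import deque
from typing import Deque, Dict, Iterable, List, Optional, Tuple


class ProxyPoolModel:
    """In-memory stand-in for the Redis list 'Proxy:queue' (hypothetical)."""

    def __init__(self, proxies: Iterable[str]) -> None:
        self.pool: Deque[str] = deque(proxies)

    def take(self) -> Optional[str]:
        # Like BLPOP: take from the head. Redis would block on an empty
        # list; the model returns None instead.
        return self.pool.popleft() if self.pool else None

    def give_back(self, proxy: str) -> None:
        # Like RPUSH: append to the tail, behind all other proxies.
        self.pool.append(proxy)


def simulate(pool: ProxyPoolModel,
             outcomes: Dict[str, Optional[int]],
             n_requests: int) -> List[Tuple[str, Optional[int]]]:
    """Replay n_requests through the pool.

    outcomes maps each proxy to the HTTP status it returns,
    or None for a connection error (the process_exception path).
    """
    log: List[Tuple[str, Optional[int]]] = []
    for _ in range(n_requests):
        proxy = pool.take()
        if proxy is None:  # pool exhausted
            break
        status = outcomes[proxy]
        log.append((proxy, status))
        if status == 200:
            # process_response: a working proxy goes back into rotation
            pool.give_back(proxy)
        # Any other status, or a connection error, drops the proxy.
    return log
```

Before the real middleware can run, two things are needed:

- Something outside it has to fill the pool with `ip:port` strings, for example `redis-cli -n 1 RPUSH Proxy:queue 1.2.3.4:8080`, where the address is a placeholder.
- The middleware has to be enabled in settings.py with an entry such as `DOWNLOADER_MIDDLEWARES = {'example.middlewares.ProxyMiddleware': 543}`. The module path here is hypothetical and depends on the project layout.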
